ABOUT HYPE

A solution that maximizes encoding performance by using all your cores

The evolution of the broadcasting and streaming sectors demands higher video resolutions at higher frame rates, while multicore computer architectures are becoming mainstream.

HYPE aims to take advantage of this situation and overcome current limitations [1,2]. The project is building a hybrid (supporting both HW and SW encoders), codec-agnostic video encoder that multiplies encoding speed by using all available encoding cores. To do so, HYPE parallelizes encoding across multiple GPU and CPU cores. This reduces transcoding time for video on demand (VOD) or allows higher image resolutions for live streaming. HYPE works out of the box with video streaming software and video editors.
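As an illustration of how a hybrid encoder can keep every core busy, the sketch below gives each encoder (for example one hardware and one software encoder) its own worker that repeatedly pulls the next pending segment from a shared queue, so faster encoders naturally take on more segments. This is a minimal sketch under stated assumptions, not HYPE's implementation: `Segment`, `encode_parallel`, `fake_hw` and `fake_sw` are hypothetical names, and the encoders are stand-ins that return placeholder bytes. Python threads only run in parallel when the encode call releases the GIL (as native encoders or subprocesses typically do).

```python
import queue
import threading
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Segment:
    index: int                                       # position of the segment in the video
    frames: list[int] = field(default_factory=list)  # hypothetical raw-frame payload


# Hypothetical encoder interface: any callable turning a Segment into bytes.
Encoder = Callable[[Segment], bytes]


def encode_parallel(segments: list[Segment], encoders: dict[str, Encoder]) -> list[bytes]:
    """Run one worker per encoder; each worker pulls segments from a shared queue."""
    work: queue.Queue = queue.Queue()
    for seg in segments:
        work.put(seg)

    results: dict[int, bytes] = {}
    lock = threading.Lock()

    def worker(encode: Encoder) -> None:
        while True:
            try:
                seg = work.get_nowait()  # take the next pending segment, if any
            except queue.Empty:
                return                   # queue drained: this worker is done
            data = encode(seg)
            with lock:
                results[seg.index] = data

    threads = [threading.Thread(target=worker, args=(enc,)) for enc in encoders.values()]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Return encoded segments in their original order, whichever worker produced them.
    return [results[i] for i in sorted(results)]


# Usage with two placeholder encoders standing in for HW and SW encoding.
def fake_hw(seg: Segment) -> bytes:
    return b"H" + bytes([seg.index])


def fake_sw(seg: Segment) -> bytes:
    return b"S" + bytes([seg.index])


out = encode_parallel([Segment(i) for i in range(6)], {"hw": fake_hw, "sw": fake_sw})
```

A shared work queue (rather than a fixed split of segments per encoder) is one simple way to balance load between encoders of unequal speed, since no encoder sits idle while segments remain.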

What has been done

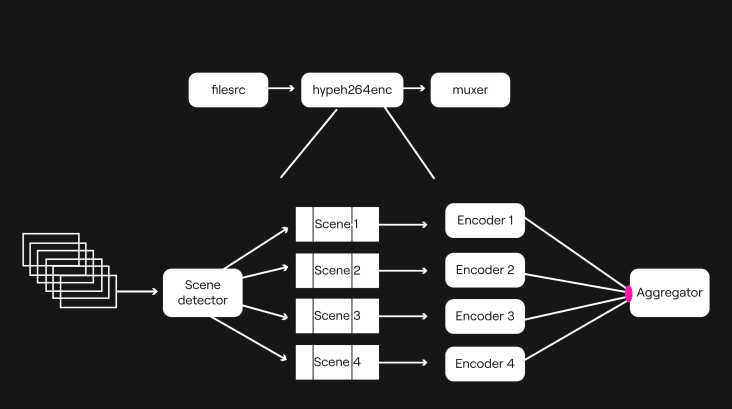

Architecture

This architecture rethinks video encoding by processing scenes in parallel. Whereas traditional methods apply uniform encoding settings across an entire video, this design assigns a dedicated encoder to each scene, potentially with a different configuration, to improve both efficiency and quality. A scene detector splits the input precisely at scene boundaries, and an aggregator merges the encoded segments back into a seamless output. With video now dominating digital content, this approach offers faster, more adaptive, content-aware encoding that keeps pace with the growing demand for high-quality video processing.
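The scene detector and aggregator described above can be sketched as follows. This is a minimal illustration, not HYPE's actual detector: it assumes a simple heuristic that cuts wherever the mean absolute difference between consecutive frames exceeds a threshold, with each frame reduced to a hypothetical flat list of luma samples. The names `detect_scenes`, `aggregate` and the `threshold` value are illustrative assumptions.

```python
from typing import Sequence

Frame = Sequence[int]  # hypothetical: one frame as a flat list of luma samples


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-sample difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def detect_scenes(frames: Sequence[Frame], threshold: float = 30.0) -> list[tuple[int, int]]:
    """Return [start, end) frame ranges, cutting where consecutive frames differ sharply."""
    if not frames:
        return []
    cuts = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            cuts.append(i)  # frame i starts a new scene
    cuts.append(len(frames))
    return list(zip(cuts[:-1], cuts[1:]))


def aggregate(encoded: dict[int, bytes]) -> bytes:
    """Join encoded segments in scene order, regardless of the order they finished in."""
    return b"".join(encoded[i] for i in sorted(encoded))


# Usage: two abrupt brightness changes produce three scenes.
frames = [[10] * 4, [12] * 4, [200] * 4, [198] * 4, [20] * 4]
scenes = detect_scenes(frames)                        # [(0, 2), (2, 4), (4, 5)]
stream = aggregate({1: b"B", 0: b"A", 2: b"C"})       # b"ABC"
```

Plain byte concatenation is a simplification: in a real pipeline each segment would have to start with an independently decodable keyframe and the outputs would typically be remuxed into a single container, which this sketch ignores.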

Methodology

HYPE

Our main achievements

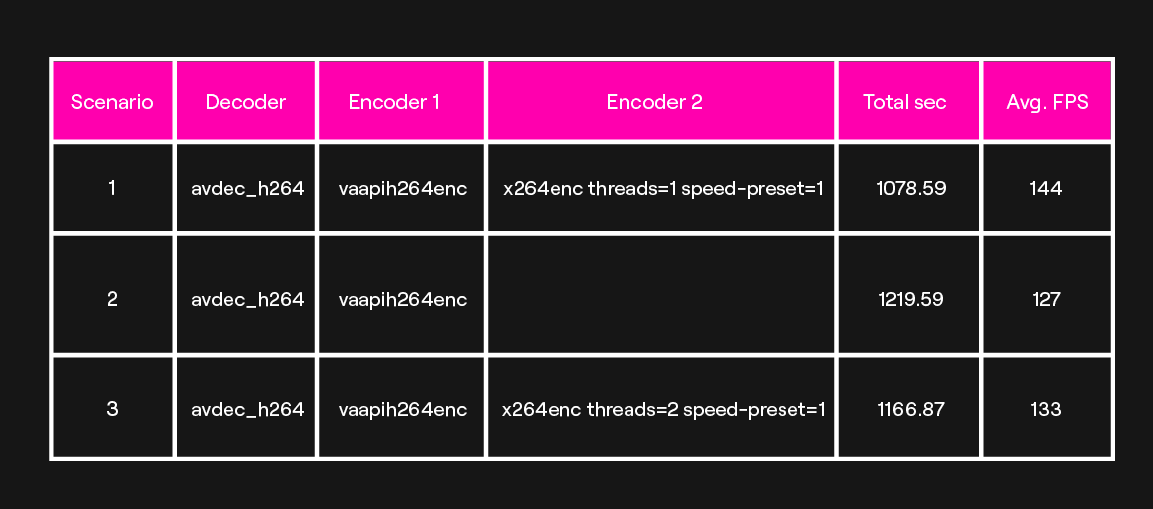

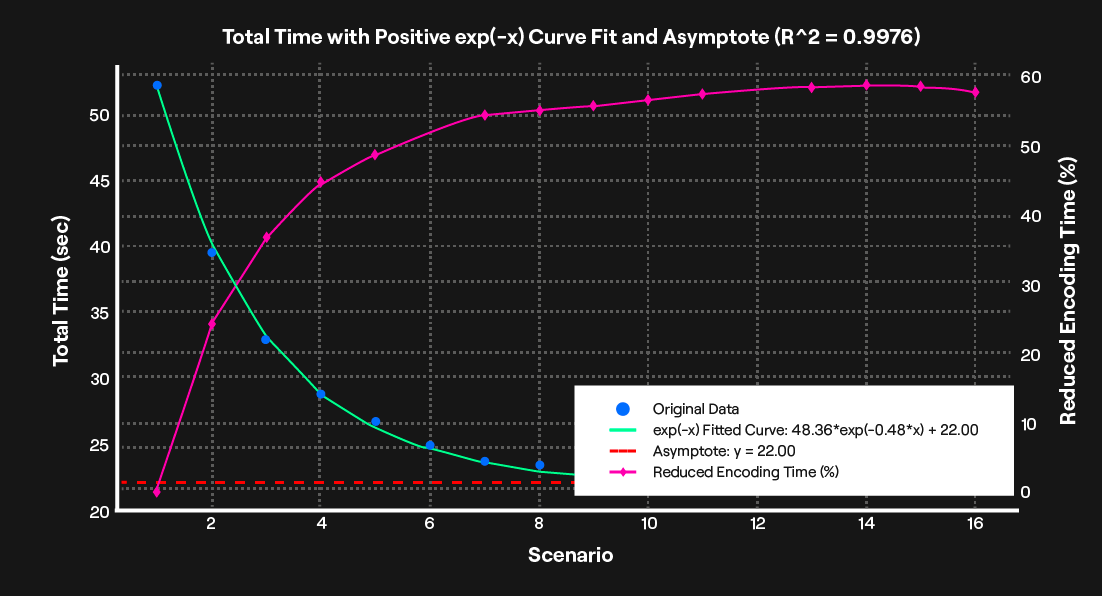

Machine: Intel Core i7, 16 GB RAM, Intel Graphics 620.

Encoding: MP4, 1920x1080, 1 h 48 min 16 s (155,904 buffers).
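As a quick consistency check on the test clip, dividing the buffer count by the clip duration gives the buffer rate. Assuming (this is an assumption, not stated above) that each buffer carries one video frame, the result corresponds to a 24 fps source:

```latex
1\,\text{h}\;48\,\text{min}\;16\,\text{s} = 3600 + 48 \cdot 60 + 16 = 6496\ \text{s},
\qquad
\frac{155\,904\ \text{buffers}}{6496\ \text{s}} = 24\ \text{buffers/s}
```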

Results: HW-only encoding is slightly slower than combined HW + SW encoding (scenarios #1 and #3 versus #2). The difference is small but measurable, and it supports our approach.

We attribute the modest improvement to the laptop's relatively weak processor, which turns CPU decoding into a bottleneck.
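One way to make the bottleneck argument explicit is a simple throughput bound for a decode-then-encode pipeline. The symbols are illustrative assumptions, not measurements from these experiments: $R_{\text{dec}}$ is the rate (frames/s) at which the CPU can decode the input, and $R_{\text{enc},i}$ is the rate of the $i$-th encoder (HW or SW) working in parallel:

```latex
T_{\text{pipeline}} \le \min\!\Big(R_{\text{dec}},\ \sum_{i} R_{\text{enc},i}\Big)
```

Adding a software encoder increases the sum on the right, but once $R_{\text{dec}}$ is the smaller term, extra encoding capacity cannot raise overall throughput. On a single laptop CPU the software encoder may also compete with the decoder for the same cores, which would further limit the gain.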